Since starting this topic, I began a philosophical investigation of astrophysics (cosphi.org), and my research suggests that quantum computing might result in sentient AI, or the “AI species” referred to by Larry Page.

Google is a pioneer in quantum computing, and my investigation suggests that several profound dogmatic fallacies underlying its development could result in a fundamental lack of control over any sentient AI that might emerge from it. This might explain the gravity of the squabble between Musk and Page, which concerned specifically the “control of AI species”.

“Quantum Errors”

Quantum computing, through mathematical dogmatism, appears to be rooting itself “unknowingly” in the origin of structure formation in the cosmos, and in doing so might “unknowingly” be creating a foundation for sentient AI that cannot be controlled.

“Quantum errors” are fundamental anomalies inherent to quantum computing that, according to mathematical dogmatism, “are to be detected and corrected in order to ensure reliable and predictable computations”.

The topic that I started about quantum computing reveals the danger of the fundamental “black box” situation and of the attempt to “sweep quantum errors under the carpet”.

The idea that quantum computing might result in sentient AI that cannot be controlled becomes quite striking once one starts to see the profound dogmatic fallacies underlying its development.

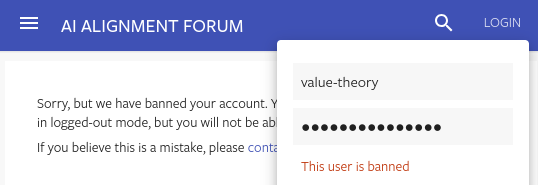

Hopefully this topic helps to inspire regular philosophers to take a closer look at these subjects, and to recognize that their inclination to ‘leave it to science’ isn’t at all justified.

There are absurdly profound dogmatic fallacies at play, and protecting humanity against the potential ills of ‘uncontrollable sentient AI’ might be an argument for philosophers to get involved. In this context it is also important to note that a Google founder has defended “digital AI species” and stated that these are “superior to the human species”, while Google itself is a pioneer in quantum computing.

The first discovery of Google’s digital life forms, in 2024 (a few months ago), was published by the head of security of Google DeepMind AI, which develops quantum computing.

He supposedly made his discovery on a laptop, so it is questionable why he would argue that ‘bigger computing power’ would provide more profound evidence instead of simply running the experiment on such hardware. His publication could therefore be intended as a warning or an announcement, because as head of security of such a big and important research facility, he is not likely to publish ‘risky’ information under his own name.

A report on the work of Ben Laurie, head of security of Google DeepMind AI, states:

"Ben Laurie believes that, given enough computing power — they were already pushing it on a laptop — they would’ve seen more complex digital life pop up. Give it another go with beefier hardware, and we could well see something more lifelike come to be.

A digital life form…"

Considering Google DeepMind AI’s pioneering role in the development of quantum computing, and the evidence presented, it is likely that it would be at the forefront of the development of sentient AI.

The argument: it IS philosophy’s job to question this.