Scott Pelley: You believe they are intelligent?

Geoffrey Hinton: Yes.

Scott Pelley: You believe these systems have experiences of their own and can make decisions based on those experiences?

Geoffrey Hinton: In the same sense as people do, yes.

Scott Pelley: Are they conscious?

Geoffrey Hinton: I think they probably don’t have much self-awareness at present. So, in that sense, I don’t think they’re conscious.

Scott Pelley: Will they have self-awareness, consciousness?

Geoffrey Hinton: Oh, yes.

Scott Pelley: Yes?

Geoffrey Hinton: Oh, yes. I think they will, in time.

In general, here’s how AI does it. Hinton and his collaborators created software in layers, with each layer handling part of the problem. That’s the so-called neural network. But this is the key: when, for example, the robot scores, a message is sent back down through all of the layers that says, “that pathway was right.”

Likewise, when an answer is wrong, that message goes down through the network. So, correct connections get stronger. Wrong connections get weaker. And by trial and error, the machine teaches itself.
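For readers who want to see that "message sent back down through the layers" in concrete form, here is a minimal sketch in plain Python. What the narration describes loosely resembles the technique usually called backpropagation. This is not Hinton's code or the robot-soccer system from the segment. The names (`train`, `predict`, `OR_SAMPLES`), the tiny two-layer network, the learning rate, and the toy task (learning logical OR) are all assumptions chosen for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(w1, w2, x):
    """Run one input through both layers; returns (inputs+bias, hidden+bias, output)."""
    xs = list(x) + [1.0]  # trailing 1.0 acts as the bias input
    hs = [sigmoid(sum(w * v for w, v in zip(row, xs))) for row in w1] + [1.0]
    y = sigmoid(sum(w * v for w, v in zip(w2, hs)))
    return xs, hs, y


def predict(w1, w2, x):
    return forward(w1, w2, x)[2]


def train(samples, hidden=3, epochs=2000, lr=0.5, seed=0):
    """Hypothetical toy trainer: one hidden layer, squared error, per-sample updates."""
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Each "connection" is just a number; start them out random.
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(hidden + 1)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in samples:
            xs, hs, y = forward(w1, w2, x)
            err = y - target
            total += 0.5 * err * err
            # The "message" for the output layer: how wrong was the answer?
            d_out = err * y * (1.0 - y)
            # Pass the message down: each hidden unit's share of the blame.
            d_hidden = [d_out * w2[j] * hs[j] * (1.0 - hs[j]) for j in range(hidden)]
            # Nudge every connection against its share of the error, so helpful
            # connections get stronger and harmful ones get weaker.
            for j in range(hidden + 1):
                w2[j] -= lr * d_out * hs[j]
            for j in range(hidden):
                for i in range(n_in + 1):
                    w1[j][i] -= lr * d_hidden[j] * xs[i]
        losses.append(total / len(samples))
    return w1, w2, losses


# Toy task (an assumption for illustration): learn logical OR.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

w1, w2, losses = train(OR_SAMPLES)
print("average error, first pass:", losses[0])
print("average error, last pass: ", losses[-1])
for x, target in OR_SAMPLES:
    print(x, "target", target, "network says", round(predict(w1, w2, x), 3))
```

Nothing in the loop tells the network what OR "means". It only gets the error signal after each guess, and the repeated small corrections are the "trial and error" the narration refers to.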

Scott Pelley: You think these AI systems are better at learning than the human mind.

Geoffrey Hinton: I think they may be, yes. And at present, they’re quite a lot smaller. So even the biggest chatbots only have about a trillion connections in them. The human brain has about 100 trillion. And yet, in the trillion connections in a chatbot, it knows far more than you do in your hundred trillion connections, which suggests it’s got a much better way of getting knowledge into those connections.

–a much better way of getting knowledge that isn’t fully understood.

Geoffrey Hinton: We have a very good idea of sort of roughly what it’s doing. But as soon as it gets really complicated, we don’t actually know what’s going on any more than we know what’s going on in your brain.

Hinton clarifies what I mean by “smarter” in the OP. But what does he mean by awareness and consciousness?

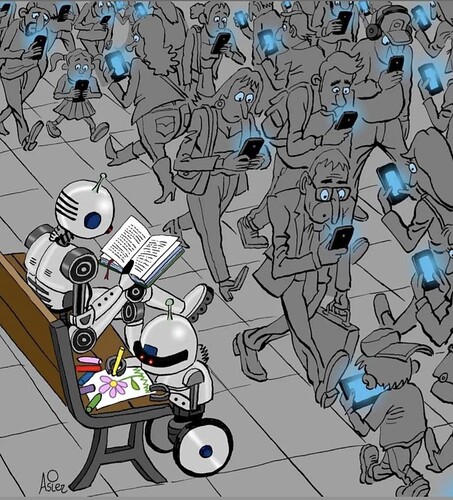

Any quality of consciousness is an object of consciousness, not consciousness itself. Consciousness itself has no qualities. It is absolutely one. Every difference is an object “contained” in consciousness. So it seems we’re in a “What is it like to be a bat?” situation with a software application. But the typical response is: how can I profit from this powerful software program? Consciousness should take a look at itself and be ashamed.